The Danger of New Computers and Smartphones with Dedicated AI Chips

The Implications of AI Technologies on Our Daily Lives

Major brands are launching laptops equipped with chips dedicated specifically to artificial intelligence (AI), often called neural processing units (NPUs). Recent developments in this field, such as AI assistants like Windows Copilot and the AI features in Apple’s latest Macs, raise serious concerns. New smartphones with AI chips present similar dangers, with an even more direct impact on privacy and user control.

1. Enhanced Data Collection

AI chips process vast amounts of data to optimize performance and personalize services, which leads to closer surveillance of users’ habits, preferences, and personal information. Smartphones, already tools for massive data collection, are becoming even more intrusive. AI assistants like Siri, Google Assistant, and Samsung Bixby continuously analyze user behavior to improve their recommendations. These systems can access sensitive data such as browsing history, GPS location, messages, and even sounds or images captured around the user.

2. Increased Risk of Hacking

As AI features spread, smartphones become prime targets for cyberattacks, because they concentrate more and more personal data in a single device. If a device is hacked, sensitive information such as passwords or banking data can be compromised.

3. Vulnerability to Surveillance

Integrating AI into dedicated chips can make devices more attractive targets for surveillance, whether by governments or hackers. Anyone who gains access to, or tampers with, the data processed by these AI features could more easily exploit the information collected.

4. Less Control Over Data

Dedicated AI chips reduce user control: when AI features are built into the hardware and the operating system, it becomes harder to disable certain data collection functions, which limits privacy protection.

5. Lack of Transparency

Companies like Apple have been criticized for their lack of transparency regarding their data management practices. Users often don’t know how their information is used or shared, especially when AI technologies are built directly into the device.

6. Algorithmic Manipulation

Biases in AI algorithms, combined with the exploitation of collected data, can subtly manipulate users through the recommendations, advertisements, or content they are shown.

7. Limited Alternatives

As AI becomes standard in devices, privacy-respecting options shrink. It becomes difficult to find alternatives that do not compromise confidentiality, which pushes consumers to accept these products despite the risks.

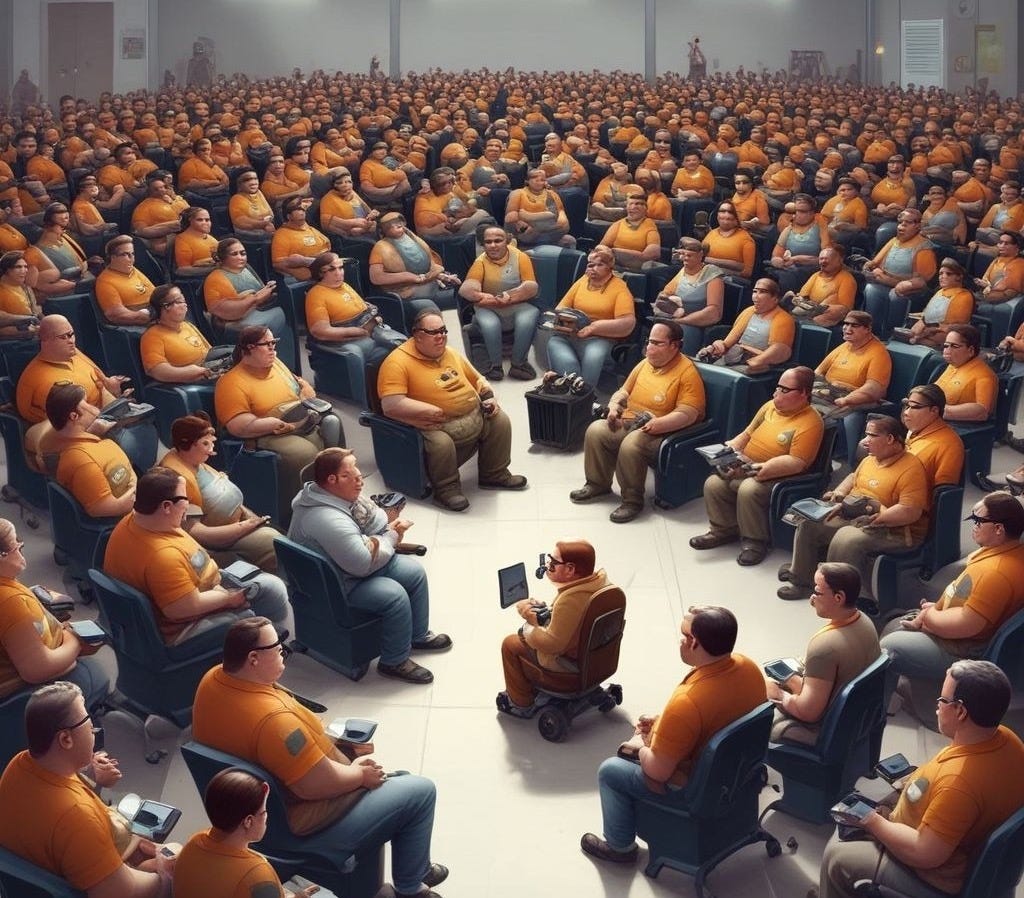

The Comfort of Inaction – A Culture of Passive Acceptance

The convenience and practicality of artificial intelligence (AI) technologies play a crucial role in their adoption, often at the expense of awareness of the associated risks. The past 20 years have shown the same pattern with digital technologies in general: convenience has kept winning out, despite the revelations of Edward Snowden and other whistleblowers.

AI-powered devices, such as voice assistants, navigation apps, and recommendation systems, simplify daily life. They offer quick and efficient solutions for managing everyday tasks, encouraging users to rely on these tools more and more without considering the implications. This dependence on convenience can lead users to neglect concerns about data privacy and security.

The ease of use of AI systems contributes to a state of cognitive laziness, in which users no longer feel the need to reflect critically on their technological choices. Many feel overwhelmed by the complexity of security issues or of the technology itself, and prefer to avoid the mental stress associated with these decisions. As a result, many remain unaware of potential dangers, such as invasive data collection and surveillance, which are often masked by the positive rhetoric surrounding AI.

When users eventually realize how dependent they have become on AI, it can come as a shock. Discovering that their personal data has been collected and exploited on a massive scale without their informed consent can lead to feelings of helplessness and betrayal. Studies show that the majority of users are not fully aware of the data they share or the potential consequences of its use.

The passive acceptance of AI-powered technology is also fueled by a culture of speed and immediacy, where users prioritize instant gratification over long-term reflection. This can lead to the normalization of privacy intrusions and the trivialization of data breaches.

Some Solutions:

Alternatives for PCs and Laptops

For users concerned about protecting their privacy, exploring alternatives is essential. This includes switching to a Linux distribution (Ubuntu, Fedora) or a BSD system (FreeBSD, OpenBSD), or to operating systems built specifically around security and privacy, such as Tails or Qubes OS. Building your own machine, with components chosen to suit your needs, remains the ideal solution.

Alternatives for Smartphones

Here are the steps to adopt a privacy-respecting smartphone:

Define Your Needs: Identify the apps and services you depend on and look for privacy-respecting alternatives to them.

Explore Alternative Operating Systems: Look into LineageOS, GrapheneOS, or CalyxOS, Android-based systems that ship without Google services by default.

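Choose a Compatible Device: Check each system’s list of supported devices. Google Pixel phones are widely supported (GrapheneOS runs only on Pixels), and certain Fairphone models are compatible with some of these systems.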

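Check App Availability: Make sure the apps you need are available, for example through alternative app stores like F-Droid, which distributes free and open-source apps.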

Install and Configure the OS: Follow the system’s official installation instructions, then review its security and privacy settings.

Educate Yourself on Best Practices: Learn good security habits and keep your system maintained with regular updates.

A Glimmer of Hope: The Rise of Open-Source AI Against Big Tech Giants

While Big Tech giants like Microsoft, Google, and Apple try to monopolize AI development, open-source, decentralized AI that runs locally is gaining momentum. These alternatives, which are more transparent, ethical, and secure, allow users to retain better control over their data.

Initiatives like Hugging Face and Stability AI provide open-source AI models that can be used locally without relying on large companies. Run locally, these models allow more private use, since data does not have to leave the user’s machine for centralized servers.

Solutions Already Available:

Hugging Face: This platform hosts open-source AI models for natural language processing and other AI applications; once downloaded, they can run locally without invasive data collection.

Stable Diffusion: An AI image generation model that allows users to create visual content locally without sending personal data to third-party servers.

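LangChain and GPT4All: GPT4All runs language models locally on ordinary computers, and the LangChain framework can connect such local models to build chatbots, offering better privacy protection than cloud-based services.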

LLaMA (Meta): Although developed by Meta, the LLaMA models can be downloaded by researchers and developers under Meta’s license, allowing them to run and experiment with them locally without relying directly on Big Tech infrastructure.

These initiatives show that a more ethical and decentralized AI is possible. The “Freedom Tech” movement, which advocates for local and privacy-respecting solutions, enables users to benefit from technological advancements while protecting their digital freedom.

This movement represents a direct resistance to Big Tech’s monopoly.